Appendix A – Forms

Apprenticeship Instructor Approval Letter

CCP Registered Apprenticeship Program

Instructor Credentialing Statement

To: Academic Deans’ Council

Re: Instructor ________________________

Registered Apprenticeship Program: ____________________

Date: ____________

This instructor has met the Registered Apprenticeship Program standard for journeyman as defined by Section 446.021(4), Florida Statutes, which defines a journeyman as “a person working in an apprenticeable occupation who has successfully completed a registered apprenticeship program, or who has worked the number of years required by established industry practices for the particular trade or occupation.”

The instructor has been screened and approved by the Registered Apprenticeship Program Committee as qualified to teach all levels of registered apprenticeship classes, including both on-the-job training (OJT) and the related instruction necessary for program completion.

Authorized by: _________________________________________________

Committee Chairperson, Registered Apprenticeship Program

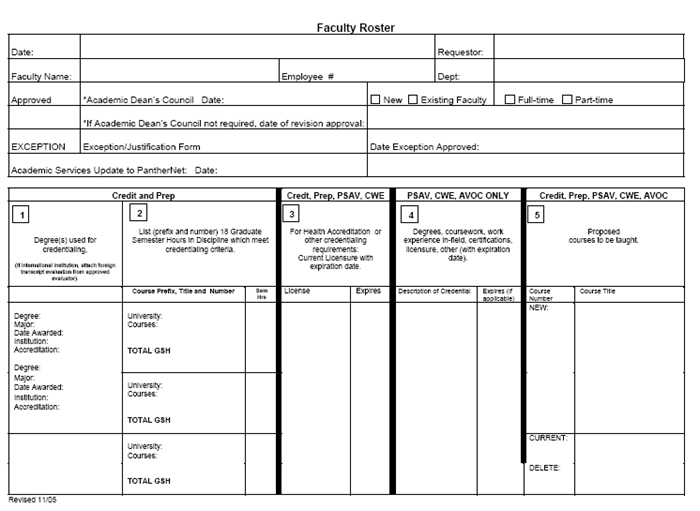

Faculty Roster Form

(for review of full-time faculty candidates prior to interview)

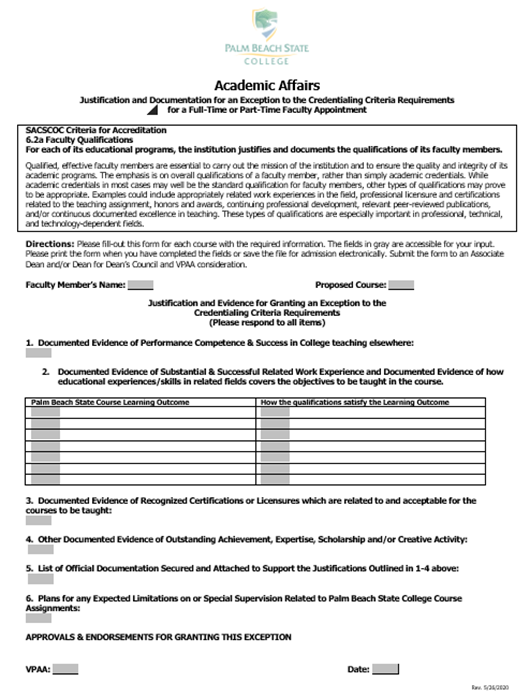

Justification for Exception to Credentialing Criteria Form


Appendix B - Mathematics Courses – Nova Southeastern University

The following courses are approved to satisfy the 18 graduate semester hours (GSH) required for teaching mathematics.

MAT 505 Geometry for Mathematics Teachers (3 Cr)

This course is designed to offer a wide perspective on geometry for graduate students planning to teach secondary mathematics. The course covers both Euclidean and non-Euclidean geometry.

MAT 507 or MAT 681 Linear and Abstract Algebra (3 Cr)

This course provides theory and computational practice with Linear Algebra, as well as a theoretical foundation for Abstract Algebra structures such as rings, fields, and groups. Students will create two portfolios of notes, activities, and exercises: one for Abstract Algebra, and one for Linear Algebra. Prerequisite/s: College Algebra and MAT 0503.

MAT 514 Topics in Algebra and Geometry (3 Cr)

This course covers concepts in number theory and the real number system, as well as algebra and geometry. The emphasis is on algebraic models (linear, quadratic, and exponential) and their applications. The course also serves as a foundation for further study of more advanced topics in algebra, geometry, trigonometry, and calculus.

MAT 515 Probability and Statistics (3 Cr)

This course introduces probability and statistics with a focus on problem solving. It covers set theory, Venn diagrams, combinations and permutations, probability, and expected value, and concludes with a unit on descriptive statistics and normal distributions. A class project requires students to think critically and apply the concepts learned in the course.

MAT 516 Elements of Differential Calculus (3 Cr)

Topics include Limits, Continuity, Definition of the Derivative, Rules of Differentiation, Implicit Differentiation, and Applications of the Derivative: Curve Sketching, Related Rates, and Optimization Problems.

MAT 517 Elements of Integral Calculus (3 Cr)

This is the second part of the two-course calculus sequence MAT 516/MAT 517. Topics include differentiation and applications of exponential and logarithmic functions, indefinite integrals via antiderivatives, definite integrals, calculating area using Riemann sums and the Fundamental Theorem of Calculus, properties of the definite integral, integration by substitution, integration by parts, use of integration tables, additional applications of the definite integral to probability theory, volumes of revolution, and separation of variables.

MAT 591 Calculus for Teachers I (3 Cr)

This course is a proof-based "epsilon-delta" calculus, ranging from limits and cluster points to differentiation. Students will solve standard calculus problems computationally and theoretically. Students in this course should have completed College Algebra and Trigonometry, or equivalent courses. Prerequisite/s: MAT 0503.

MAT 592 Calculus for Teachers II (3 Cr)

This course is a proof-based "epsilon-delta" calculus, ranging from integral calculus to sequences and series. Students will solve standard calculus problems computationally and theoretically. Students in this course should have completed Calculus I or an equivalent course. Prerequisite/s: MAT 0592.

MAT 685 Symbolic Representation and Number Theory in Mathematics (3 Cr)

This course focuses on notational systems, number theory, and the rationale behind them. It also addresses the increasing use of manipulatives and kinesthetic learning. The course is designed to include significant number theory preparation for students wishing to earn initial certification. Prerequisite/s: College Algebra and MAT 0503.

MAT 689 Probability and Statistics in Mathematics Education (3 Cr)

This course offers preparation in probability and statistics for secondary mathematics teachers, as well as for teachers pursuing initial teacher certification. Students will also gather and analyze statistics in educational research.

Appendix C - Guidelines for Credentialing Science Faculty

The following guidelines list the discipline areas to look for on applicants' transcripts when credentialing faculty for the courses noted below.

All of the courses should be at the graduate level.

BSC 1005/L:

Possible discipline areas on the transcript: Zoology, Botany, Microbiology, Genetics, Cell Biology, Molecular Biology.

BSC 1010/L:

Possible discipline areas on the transcript: Zoology, Botany, Microbiology, Genetics, Biochemistry, Cell Biology, and Evolution.

BSC 1011/L:

Possible discipline areas on the transcript: Zoology, Botany, Microbiology, Genetics, Cell Biology, Molecular Biology, Vertebrate Zoology or Comparative Anatomy, Invertebrate Zoology.

ZOO 2303:

Possible discipline areas on the transcript: Zoology, Botany, Microbiology, Genetics, Cell Biology, Molecular Biology, Ichthyology, Ornithology, Mammalogy, or Herpetology.

BSC 2085/L, BSC 2086/L:

Possible discipline areas on the transcript: Comparative Anatomy or Vertebrate Zoology, Animal Physiology, and Biochemistry, plus at least two of the following: Human Embryology, Neurobiology, Endocrinology, Cytology or Cell Biology, Histology, Cell Physiology, or Cancer Biology.

MCB 2010/L:

Possible discipline areas on the transcript: Immunology, Parasitology, Bacteriology, Medical Bacteriology, Virology, Advanced Virology, Endocrinology, Cytology, Ultrastructure.

BSC 1050/L:

Possible discipline areas on the transcript: Zoology, Botany, Microbiology, Genetics, and at least one graduate level class in Ecology.

Appendix D - Position Statement from the Human Anatomy and Physiology Society (HAPS)

Approved November 19, 2005

Position Statement on Accreditation of Faculty in 2-semester Human Anatomy and Physiology Courses

HAPS • 8816 Manchester, Suite 314 • St. Louis, MO 63105

1-800-448-4277 • www.hapsweb.org

Background:

The 2-semester/3-quarter undergraduate course usually known as "Human Anatomy and Physiology" or simply "Anatomy and Physiology" is a large introductory course that may be offered by the Biology, Zoology, or Natural Sciences departments. Nationwide, roughly two-thirds of these courses are taught at community colleges, and the rest primarily at universities. This is one of the larger introductory level courses, with approximately 450,000 students enrolled each year in the US and Canada.

The majority of students taking the 2-semester A&P course are planning a career in the health sciences. The career paths include nursing, occupational therapy, physical therapy, radiation technology, laboratory/medical technology, dental hygiene, pharmacology and other related disciplines. Students majoring in physical education, sports training, or kinesiology make up a smaller component of the classroom population. At community colleges the course is often taken in the first year of college, without college-level prerequisites. Entry into any one of the career programs listed above is contingent upon successful completion of the A&P course with a grade of ‘C’ or above; in competitive programs, the grade requirements may be much more restrictive.

It is important to note that pre-medical students and biology majors do not typically take this course because it does not count toward their degree requirements. Instead, they take Majors Biology (or Zoology/Botany) as freshmen, followed by more specialized and detailed upper-level courses in their junior and senior years. However, the distinction between biology majors and allied health majors is an administrative convenience, not an indication that A&P is anything other than an integrative biological science.

Anatomy and Physiology as a Biological Science

As an introductory level survey course, A&P covers a diversity of topics, including not only anatomy and physiology, but introductory biochemistry, cytology, histology, molecular biology, genetics, immunology, nutrition, embryology, and pathology. The coverage is so diverse, and the principles so relevant to a general understanding of modern biology, that a 1-semester version of this course is often used to satisfy the general biology requirements for non-major students.

Two-semester A&P courses are usually taught by Biology, Zoology, or Natural Sciences departments; in rare cases, the sequence may be offered by another academic division (e.g., a department within an associated medical school) as a service course. As a result, the diversity of faculty roughly approaches the diversity of topics presented within the course.

The Need for Standardization of Criteria for the Selection and Accreditation of Faculty:

Faculty qualification standards that are too lax make it difficult for the assigned faculty to teach the core curriculum topics, whereas standards that are too restrictive negatively impact both faculty and students. It is in the best interests of all parties to use a standardized set of criteria when evaluating the faculty of anatomy and physiology courses during an accreditation review. For example, at one college the application of overly restrictive standards, which refused to accept comparative, vertebrate, mammalian, or clinical courses among the relevant course credits required for faculty qualification, led to the dismissal of faculty and the elimination of associated A&P and Microbiology courses from the curriculum.

It is our position that Human Anatomy and Physiology is a subset of biology, and the course has extensive overlap with other biological sciences. Opportunities to obtain an M.S. or Ph.D. degree in “human anatomy and physiology” are extremely limited, but there is so much duplication of coverage among courses in modern biology that such specialization is unnecessary. Because the basic anatomical, physiological, histological, and developmental patterns are found across the vertebrate lineage (and often across the major animal phyla), a great diversity of biology courses are directly applicable to human A&P. We also believe that any evaluation of current or potential instructors should consider graduate and postgraduate teaching experience in courses related to anatomy and physiology toward satisfaction of minimum criteria.

HAPS has developed accreditation standards based on a survey of successful anatomy and physiology courses nationwide. We feel that these criteria are sufficient to demonstrate that an instructor is competent to teach a 2-semester anatomy and physiology course. We are therefore encouraging all accreditation agencies and college administrations to use these criteria when evaluating courses or prospective faculty.

The HAPS Standards for Instructors in Anatomy and Physiology:

The HAPS minimum criteria for teaching the introductory level A&P course are (1) a Master’s degree in one of the biological sciences and (2) 18 related credit hours as defined below. A professional degree (M.S.N., M.D., D.O., D.C., D.V.M., or other advanced clinical degrees awarded by nationally accredited institutions) may be accepted as fulfilling the degree requirement.

Instructors must be prepared to integrate introductory level chemistry and biochemistry not only with anatomy and physiology, but with a variety of other relevant topics in biology, including cytology, cell physiology, histology, organology, microbiology, immunology, embryology, and nutrition. Because of the interrelatedness of topics in biology, a course in any one of the topics above must of necessity include a significant amount of anatomy and physiology. Using the HAPS Curriculum Guidelines as a reference, Attachment 1 lists courses that are relevant to the teaching of anatomy and physiology at the introductory level. This list is intended as a reference and a guide, not as a comprehensive or exclusionary listing of applicable courses.

The 18 related credit hours can be accumulated through a combination of (1) undergraduate and graduate course work, (2) teaching experience as a graduate teaching assistant or as graduate or postgraduate faculty in A&P courses, (3) postgraduate course work in human anatomy and physiology, including continuing education credits, and (4) research in the field of A&P as evidenced by publication in peer-reviewed journals.

Credits should be calculated as follows:

- for coursework: the credits awarded on the relevant student transcripts or continuing education certificate

- for graduate TA work or as faculty while a graduate student: the credits awarded on the graduate student transcript or the credit value of the course

- for postgraduate teaching: 3 credits for each semester taught

- for continuing education: the CE credits or units awarded for satisfactory completion of coursework in human anatomy and/or physiology

- for research publications: 3 credits for each peer-reviewed journal article

Any questions regarding this position statement should be directed to the HAPS President and the Board of Directors. A current copy of the Course and Curriculum Guidelines may be downloaded from the HAPS website at http://www.hapsweb.org.

Courses relevant to the teaching of human anatomy and physiology*

Anatomy-related:

- Human anatomy

- Comparative anatomy

- Vertebrate anatomy

- Veterinary anatomy

- Surgical anatomy

- Gross anatomy

- Neuroanatomy

- Physical anthropology

- Kinesiology

- Human embryology

- Comparative embryology

- Vertebrate embryology

- Cytology

- Histology

- Organology

- Pathology

Physiology-related:

- Human physiology

- Animal physiology

- Comparative physiology

- Mammalian physiology

- General physiology

- Medical physiology

- Veterinary physiology

- Pathophysiology

- System physiology (e.g., neurophysiology, cardiovascular physiology, endocrinology, immunology, respiratory physiology)

- Cell physiology

- Exercise physiology

- Molecular biology

- Genetics

Ancillary courses of value**:

- Microbiology

- Nutrition

- Biochemistry

- Pharmacology

- Pharmacophysiology

*Note: This list should not be considered comprehensive. It is meant simply to provide an indication of the diversity of topics directly relevant to human anatomy and physiology, as reflected in the HAPS Core Curriculum Guidelines.

**A maximum of 6 credits from this category may be counted toward the 18-credit requirement.
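As an illustration only, the HAPS tally rules above (transcript and continuing education credits counted as awarded, 3 credits per semester of postgraduate teaching, 3 credits per peer-reviewed article, and at most 6 credits from the ancillary category) can be expressed as a short Python sketch. The `CreditItem` record, its `kind` labels, and the sample candidate are hypothetical, the sketch is not an official HAPS or college tool, and it assumes the 6-credit cap applies only to ancillary coursework.

```python
from __future__ import annotations

from dataclasses import dataclass

REQUIRED_CREDITS = 18  # HAPS minimum related credit hours
ANCILLARY_CAP = 6      # maximum credits counted from the ancillary category


@dataclass
class CreditItem:
    """One entry from a candidate's file (hypothetical record layout)."""
    kind: str                # "coursework", "grad_ta", "continuing_ed",
                             # "postgrad_teaching", or "publication"
    amount: float            # credits awarded, semesters taught, or article count
    ancillary: bool = False  # True for ancillary courses (e.g., microbiology)


def item_credits(item: CreditItem) -> float:
    """Convert one item to related credit hours."""
    if item.kind in ("postgrad_teaching", "publication"):
        # 3 credits per semester taught or per peer-reviewed article
        return 3 * item.amount
    # Coursework, graduate TA work, and CE credits count as awarded
    return item.amount


def related_credits(items: list[CreditItem]) -> float:
    """Total related credits, capping the ancillary category."""
    core = sum(item_credits(i) for i in items if not i.ancillary)
    ancillary = sum(item_credits(i) for i in items if i.ancillary)
    return core + min(ancillary, ANCILLARY_CAP)


# Hypothetical candidate file
candidate = [
    CreditItem("coursework", 4),                  # human physiology
    CreditItem("coursework", 3),                  # histology
    CreditItem("coursework", 4, ancillary=True),  # biochemistry
    CreditItem("coursework", 4, ancillary=True),  # microbiology
    CreditItem("postgrad_teaching", 2),           # two semesters of A&P taught
    CreditItem("publication", 1),                 # one peer-reviewed article
]

total = related_credits(candidate)
print(total, total >= REQUIRED_CREDITS)  # 22 True
```

Under these assumptions, the 8 ancillary credits are capped at 6, so the sample candidate totals 22 related credits and clears the 18-credit minimum; the separate Master’s degree requirement is not checked here.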

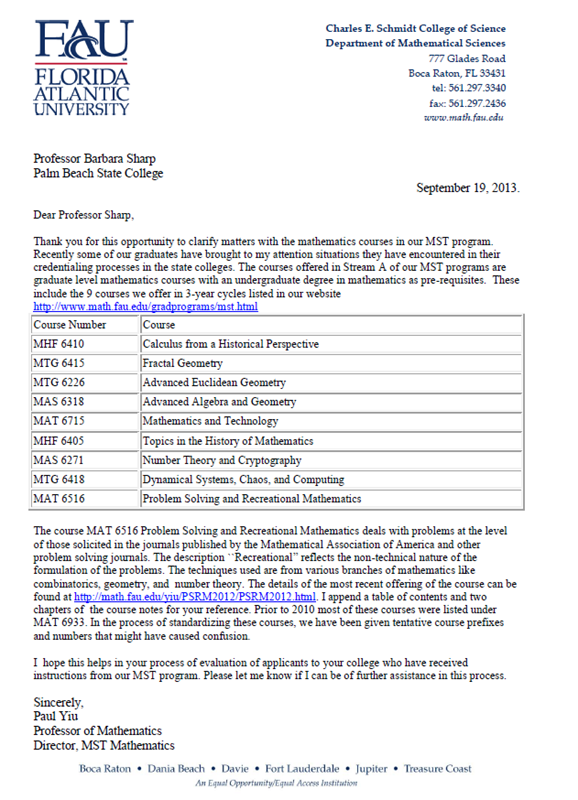

Appendix E - Acceptable Mathematics Courses from Florida Atlantic University’s Master’s Degree in Teaching Mathematics

Note: All Special Topics courses (typically numbered MXX 693X) must be submitted with a course description to be considered for credentialing. These courses are not included in the list below.

| Course Number | Course Title and Description |
|---|---|
| MAS 6271 | Number Theory and Cryptography (3 credits). Elementary number theory with applications to cryptography, including congruences and modular arithmetic, finite fields, public key cryptography (RSA), primality testing, and factoring. |
| MAS 6318 | Advanced Algebra and Geometry (3 credits). Prerequisites: MAS 2103 and MAS 4301. Integrative treatment of advanced topics in classical algebra and geometry. Not intended for students in the Ph.D. program in mathematics. |
| MAT 6516 | Problem Solving and Recreational Mathematics (3 credits). Prerequisites: MAA 4200 and MAS 4301. Introduction to mathematical problem-solving literature, principles and methods of problem solving, and analysis of selected famous problems in recreational mathematics. Not intended for students in the Ph.D. program in mathematics. |
| MTG 6415 | Fractal Geometry (3 credits). Prerequisite: permission of instructor. Fractal geometry describes the seemingly irregular shapes and patterns we encounter in the natural world. This course explores the mathematical concepts behind fractal geometry and gives numerous applications integrating mathematics with the natural world. |
| MTG 6226 | Advanced Euclidean Geometry (3 credits). Prerequisites: MAS 2103 and MAS 4301. Emphasizes the use of homogeneous barycentric coordinates in triangle geometry and of dynamic software to explore basic theorems and problems. Not intended for students in the Ph.D. program in mathematics. |
| MTG 6418 | Dynamical Systems, Chaos, and Computing (3 credits). Prerequisite: permission of instructor. Students reconstruct some modern mathematical discoveries in dynamical systems using widely accessible programs such as spreadsheets and dynamic geometry software. Explorations illustrate the relation of chaos theory to the iteration of second-order polynomials and to fractal geometry, as well as general mathematical patterns. |
| MHF 6410 | Calculus from a Historical Perspective (3 credits). Selected topics in calculus from the historical point of view, including Archimedes’ quadrature of the parabola, the calculation of Pi, the Bernoulli numbers, and sums of powers of numbers. |

Supplemental Letter from FAU’s MST Program Director

Appendix F - List of Reviewers by Discipline

| Discipline | Reviewer |
|---|---|
| Accounting | Lancaster, Kim |
| Air Conditioning/HVAC | Gauthier, Thomas |
| Anthropology | Vargas, Roy |
| Apprenticeship | Gauthier, Thomas |
| Archaeology | Caldwell, Susan |
| Architecture | Vargas, Roy |
| Art | White, Richard |
| Astronomy | Ramos, Carlos |
| Automotive Service Technology | Gauthier, Thomas |
| Automation | Mercer, Becky |
| Banking | Lancaster, Kim |
| Barbering | McAllister, Gloria |
| Biology | Ramos, Carlos |
| Biotechnology | Mercer, Becky |
| Biotechnology Lab Specialist | Mercer, Becky |
| Business Administration & Management | Lancaster, Kim |
| Business Entrepreneurship | Lancaster, Kim |
| Chemistry | Ramos, Carlos |
| Community-Based Learning | Johnson, Jennifer |
| Computer Information Systems | Lancaster, Kim |
| Cosmetology/Facials/Nails | Gauthier, Thomas |
| Crime Scene Technology | Cipriano, Barbara |
| Criminal Justice Institute | Cipriano, Barbara |
| Criminal Justice Transfer | Cipriano, Barbara |
| Dental Hygiene/Dental Assisting | Wiley, Edward |
| Diesel Technology | Gauthier, Thomas |
| Early Childhood Education/Entry-Level/Certificate Programs | Caldwell, Susan |
| Economics | Vargas, Roy |
| Education | Caldwell, Susan |
| Electrical Power Technology | Mercer, Becky |
| Electrician | Gauthier, Thomas |
| Emergency Management | Cipriano, Barbara |
| Emergency Medical Services/Paramedic/EMT | Cipriano, Barbara |
| Engineering | Vargas, Roy |
| English/English Preparatory (Developmental Education) | Johnson, Jennifer |
| English for Academic Purposes | Johnson, Jennifer |
| Environmental Science Technology | Mercer, Becky |
| Facilities Maintenance Technician | Gauthier, Thomas |
| Fire Science Technology/Fire Recruit | Cipriano, Barbara |
| Foreign Language | Mercer, Becky |
| Geography | Caldwell, Susan |
| Geology | Ramos, Carlos |
| Graphic Design Technology | White, Richard |
| Health/Health & Fitness/Physical Education | Wiley, Edward |
| Health Information Tech/Health Informatics/Medical Info Coder/Healthcare Doc | Wiley, Edward |
| Heavy Equipment Service Technician | McAllister, Gloria |
| History | Vargas, Roy |
| Hospitality Management | Lancaster, Kim |
| Human Services | Caldwell, Susan |
| Industrial Management | Mercer, Becky/Andric, Oleg |
| Insurance | Lancaster, Kim |
| Interdisciplinary/Honors | Albertini, Velmarie/Scott-Lubin, Sheila |
| Interior Design | White, Richard |
| Journalism/Mass Communications | White, Richard |
| Landscape & Horticulture Management | Mercer, Becky |
| Library Science | Krull, Rob |
| Machining Technology | Gauthier, Thomas |
| Massage Therapy | Vargas, Roy |
| Mathematics/Mathematics (Developmental Education) | Hamadeh, Dana |
| Medical Assisting | Wiley, Edward |
| Mechatronics | Mercer, Becky |
| Motion Picture & Television Production | White, Richard |
| Music | White, Richard |
| Nursing (AS) | Copeland, Deborah |
| Nutrition | Wiley, Edward |
| Oceanography | Mercer, Becky |
| Office Management Technology | Lancaster, Kim |
| Office Occupations | Lancaster, Kim |
| Ophthalmic Medical Technology | Mercer, Becky |
| Paralegal | Lebile, Linda |
| Patient Care Assistant | Wiley, Edward |
| Philosophy | Vargas, Roy |
| Photography | Vargas, Roy |
| Physical Science | Ramos, Carlos |
| Physics | Ramos, Carlos |
| Political Science | Vargas, Roy |
| Practical Nursing | Wiley, Edward |
| Psychology | Caldwell, Susan |
| Public Safety Telecommunications | Cipriano, Barbara |
| Radiography | Wiley, Edward |
| Reading (Developmental Education) | Johnson, Jennifer |
| Real Estate | Lancaster, Kim |
| Religion | Vargas, Roy |
| Respiratory Care | Wiley, Edward |
| Security and Automation Systems Technology | Gauthier, Thomas |
| Sociology | Caldwell, Susan |
| Sonography | Wiley, Edward |
| Speech | Lebile, Linda |
| Student Development-Strategies/Leadership | Johnson, Jennifer |
| Supply Chain Management | Lancaster, Kim |
| Surgical Technology | Wiley, Edward |
| Sustainable Construction Management | Gauthier, Thomas |
| Teacher Certification Program (EPI – Educator Preparation Institute) | Caldwell, Susan |
| Theater | White, Richard |
| Vocational Preparatory Instruction | Johnson, Jennifer |
| Welding Technology | Gauthier, Thomas |
| Bachelor’s Degrees | |
| Entrepreneurship | Gladney, Don |
| Cardiopulmonary Sciences | Gladney, Don |
| Health Management | Gladney, Don |
| Human Services | Gladney, Don |
| Information Management | Gladney, Don |
| Nursing | Gladney, Don |
| Project Management | Gladney, Don |
| Supervision & Management | Gladney, Don |

Created July 2019, Revised September 2020

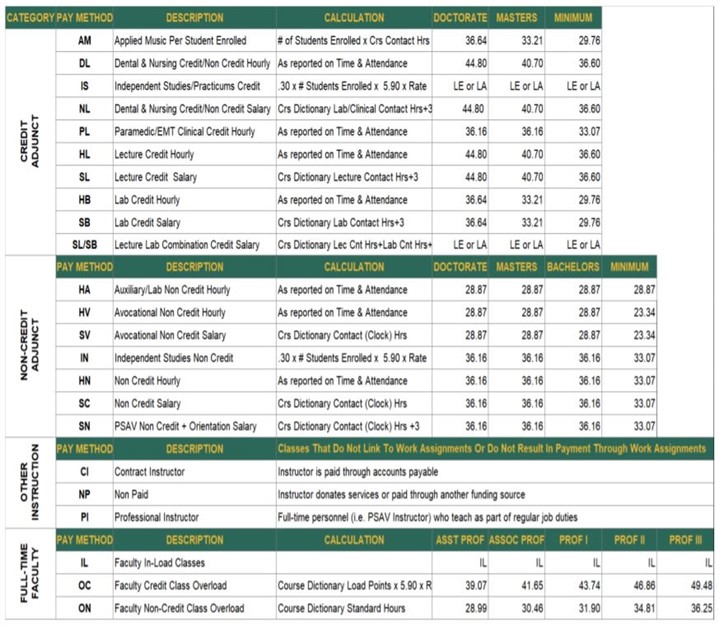

Appendix G - Pay Method Code Table – 2020

July 2020

Appendix H - Policies and Procedures Oversight

| Description | Office/Department |
|---|---|
| POLICIES | |
| Academic Affairs Calendar & Final Exam Schedule | Dean of Academic Affairs, LW campus |
| Academic Checklist | Associate Deans/Academic Services |
| Academic Progress Standards | Registrar’s Office, LW campus |
| Academic Records Retention Policy | Departments/Registrar’s Office (LW) |
| Attendance and the First Day of Class | Departments/Registrar’s Office (LW) |
| Attendance Statement and Reporting Requirements | Departments/Registrar’s Office (LW) |
| Block Scheduling Templates | Academic Services |
| Chart of Programs | Associate Deans/Academic Services |
| Class Audit Policy | Registrar’s Office (LW) |
| Class Size Guidelines/Suggested Week Guidelines | Academic Services Office |
| Cluster Guidelines | VPAA Office |
| Conversion of Noncredit to Credit | Academic Services |
| Course Outlines Database | Academic Services |
| Credit Hour Definition | VPAA Office |
| District Board of Trustees Policies – Academic Affairs | VPAA Office |
| Dual Enrollment Guidelines | Academic Deans/VPAA Office |
| Educational Technology Use | eLearning Department |
| E-Learning – Faculty Load/E-Pack Policy | eLearning Department |
| Email Guidelines for Faculty and Instructors | Information Technology |
| Faculty Observation and Student Assessment Guidelines | VPAA Office |
| Faculty Office Hours | VPAA Office |
| Final Course Grade Appeal Policy and Process | Registrar’s Office, LW campus |
| Foreign Transcript Evaluation | Academic Services |
| Gordon Rule Policy Statement | Academic Services |
| Independent Study Definitions and Guidelines | VPAA Office |
| Lab and Lecture Co-enrollment Policy for Science Classes | Registrar’s Office |
| Mid-Term Grading | Academic Services |
| Official Communication with Students | Registrar’s Office |
| Online Course Equivalency Process | Academic Services |
| Saturday, Sunday & Holiday Class Scheduling | Academic Services |
| Student Training in Technology | eLearning Department |
| Syllabus Posting On-line | IDT eLearning Department/I.T. |
| Syllabus Template/Simple Syllabus | IDT eLearning Department/I.T. |
| Testing Center Use Guidelines for E-learning Students | eLearning Department |
| Textbook Affordability & Certification Procedures | VPAA Office |
| Textbook Affordability Report | Academic Services |
| Vendor Gifts | Auxiliary Services Office |
| Web Grading | eLearning Department |
| Year-Round Schedule | VPAA Office |
| PROCEDURES | |
| Accreditation Report/Information | Departments/Academic Services |
| Articulation Agreement Procedures | Academic Services |
| Corporate & Continuing Education/Avocational Course Development | CCE/Academic Services |
| Continuing Contract Procedures | VPAA Office |
| Course Special Fee Procedure | Departments/Academic Services |
| Credentialing Non-Employees | Departments/Academic Services |
| Curriculum Development | Departments/Academic Services |
| Faculty Credentialing | Academic Services |
| Faculty Hiring Procedures | VPAA Office/Human Resources Office |
| Faculty Meetings | VPAA Office |
| Field Observation Procedures for Teacher Education | Department/Academic Services |
| Foreign Language Evaluation for the Baccalaureate Degree | BAS Program |
| General Education Review Procedure | Institutional Research & Effectiveness |
| Incomplete Grade Documentation Procedure | Registrar’s Office, LW campus |
| Instructional Overloads | VPAA Office |
| Leave for Commencement Procedure | VPAA Office |
| IRE Recommendation Procedure - New Programs | Institutional Research & Effectiveness |
| Material Selection Rubric | Associate Dean (Mathematics), LW campus |
| New Faculty Campus Orientation Procedure | CTLE/VPAA Office |
| Off-Campus College Activity | Student Services |
| Pilot Project Procedure & Guidelines | VPAA Office |
| Prior Learning Assessment | Departments/Academic Services/Registrar |
| Program Assessment | Institutional Research & Effectiveness |
| Program Termination Procedure | Departments/VPAA Office/Academic Services |
| Program Transfer Procedure | Academic Services |
| Release Time Procedures & Guidelines | VPAA Office |
| Request for Advancement in Rank – Faculty | VPAA Office |
| Sabbatical Leave Guidelines and Timeline | VPAA Office |

July 2019, January 2020, July 2020

Appendix I - General Education Philosophy, Outcomes, and Competencies

Philosophy Statement

The general education program at Palm Beach State College offers an interdisciplinary core curriculum that advances transformative thinking to prepare students for responsible participation in a global community.

Appendix J - Template for Writing Learning Outcomes²⁹

This template may be used to write learning outcomes for a workshop, course, or program.

To use the template, work backwards! Begin with the far-right column. Note that “in here” means in the course, program, or workshop, while “out there” refers to what students do after they leave, and for an extended period afterward. Stiehl (2017)¹⁵ even suggests that this period lasts for life!

Directions

- Answer the question about the intended outcomes.

- Identify assessment tasks.

- List skills that must be mastered to demonstrate the outcome.

- List the concepts and issues that students must understand to demonstrate the outcome.

| Concepts & Issues | Skills | Assessment Tasks | Intended Outcomes |
|---|---|---|---|
| What must the students understand to demonstrate the outcome? | What skills must students master to demonstrate the outcome? | What will students do “in here” to demonstrate the outcome? | What do students need to be able to DO “out there” that we are responsible for “in here”? |
| | | | |

²⁹ Adapted from the Program/Course-Workshop Outcomes Guide, a resource by Ruth Stiehl (2017) in The New Outcome Primers Series 2.0, which includes six “primers” on outcomes and related topics. Published by The Learning Organization, Corvallis, Oregon. Visit www.outcomeprimer.com.

Appendix K - Assessing the Quality of Intended Outcome Statements

Copyright© 2016 Stiehl & Sours. Limited rights granted to photocopy for instructor and classroom use.

Template: Scoring Guide—Assessing the Quality of Intended Outcome Statements

Rating scale: 1 = absent, 2 = minimally met, 3 = adequately met, 4 = exceptionally met

| Characteristics of Good Learning Outcome Statements | 1 | 2 | 3 | 4 | Suggestions or Improvements |
|---|---|---|---|---|---|
| 1. Action: All the statements are written in active voice, and the action words have been carefully chosen to describe the intention. | | | | | |
| 2. Context: All the statements describe what you envision students doing “after” and “outside” this academic experience, because of this experience. | | | | | |
| 3. Scope: Given the time and resources available, the outcome statements represent reasonable expectations for students. | | | | | |
| 4. Complexity: The statements, as a whole, have sufficient substance to drive decisions about what students need to learn in this experience. | | | | | |
| 5. Brevity and Clarity: The language is concise and clear, easily understood by students and stakeholders. | | | | | |

Appendix L - Program Learning Outcomes Curriculum Map

When mapping program learning outcomes (PLOs) to curriculum or assessment, be sure to indicate who is doing the mapping (individual faculty names or a department), the name of the program, and the date.

Begin by listing each PLO. Add rows if needed.

| # | Program Learning Outcome |
|---|---|
| 1 | |
| 2 | |
| 3 | |
| 4 | |
| 5 | |
| 6 | |
| 7 | |

Continue by following these steps. Examples are provided in the first three rows. Add rows if needed.

- Enter each PLO in the first row.

- Enter the appropriate course (point of instruction) in the first column.

- For each PLO, indicate whether and when the required skills for that outcome are introduced (I) for the first time, reinforced (R), giving the student a chance to practice the skill(s), or emphasized (E) with an expectation of student mastery. Not every outcome is necessarily covered in every course, but every outcome should be covered at some level and assessed at some point in the program.

| Point of Instruction (When are the required skills to achieve the PLO covered, and when are students required to demonstrate mastery?) | PLO # 1: _______ | PLO # 2: _______ | PLO # 3: _______ | PLO # 4: _______ | PLO # 5: _______ | PLO # 6: _______ |
|---|---|---|---|---|---|---|
| Example: Course 1 | I | | | I | I | |
| Example: Course 2 | R | I | | E | R | |
| Example: Course 3 | | R | | | E | E |
| | | | | | | |
| | | | | | | |
| | | | | | | |
| | | | | | | |
| | | | | | | |
| | | | | | | |
| | | | | | | |

Appendix M - Course Learning Outcomes Curriculum Map

When mapping course learning outcomes (CLOs) to curriculum or assessment, be sure to indicate who is doing the mapping (individual faculty names or a department), the name of the course, and the date.

Begin by listing each CLO. Add rows if needed.

| # | Course Learning Outcome |
|---|---|
| 1 | |
| 2 | |
| 3 | |
| 4 | |
| 5 | |
| 6 | |
| 7 | |

Continue by following these steps. Examples are provided in the first three rows. Add rows if needed.

- Enter each CLO in the first row.

- Enter the appropriate point of instruction in the first column.

- For each CLO, indicate whether and when the required skills for that outcome are introduced (I) for the first time, reinforced (R), giving the student a chance to practice the skill(s), or emphasized (E) with an expectation of student mastery. Not every outcome will necessarily be covered at every point of instruction, but every outcome should be covered at some level and assessed at some point in the course.

| Point of Instruction (When are the skills required to achieve the CLO covered? Enter unit, chapter, point in time, etc., in this column.) | CLO # 1: _______ | CLO # 2: _______ | CLO # 3: _______ | CLO # 4: _______ | CLO # 5: _______ | CLO # 6: _______ |
|---|---|---|---|---|---|---|
| Example: Ch. 1 | I | | I | I | R | |
| Example: Ch. 2 | R | I | R | E | | |
| Example: Last week of the semester | | R | E | | E | E |
| | | | | | | |
| | | | | | | |
| | | | | | | |
| | | | | | | |
| | | | | | | |
| | | | | | | |
| | | | | | | |

Appendix N - Assessment Plan Templates

Program Assessment Plan

Save a copy of this document and add columns as needed for program learning outcomes (PLOs).

Assessment Plan for [insert program name and code]

Cycle: [insert cycle dates]

| | PLO # 1: Enter PLO. | PLO # 2: | PLO # 3: |
|---|---|---|---|
| Measure(s) (How will the PLO be assessed?) | Enter the measure, specifying the class in which it is administered, and provide any scoring details. | | |
| Achievement Target(s) (What is an acceptable performance level?) | Enter the percentage of students expected to meet the standard, and clearly state the standard. | | |
| Which, if any, ILO(s) are supported?* | Enter any institution-level outcome supported by the PLO. | | |

Course Assessment Plan

Save a copy of this document and add columns as needed for course learning outcomes (CLOs).

Assessment Plan for [enter course]

Program: [enter program – general education and the AA degree are also programs for this purpose]

Cycle: [enter cycle dates]

Reporting: [enter instructions to faculty and adjuncts who must report/record their results]

| | CLO # 1: Enter CLO. | CLO # 2: | CLO # 3: |
|---|---|---|---|
| Measure(s) (How will the CLO be assessed?) | Enter the measure, specifying the class in which it is administered, and provide any scoring details. | | |
| Achievement Target(s) | Enter the percentage of students expected to meet the standard, and clearly state the standard. | | |
| Which, if any, PLO(s) are supported? | Enter any program-level outcome supported by the CLO (for BAS, BSN, AS, and PSAV programs only). | | |
| Which, if any, ILO(s) are supported?* | Enter any institution-level outcome supported by the CLO. | | |

*ILO = Institutional Learning Outcome

Appendix O - Assessing the Assessment

Adapted from the “Template: Scoring Guide—Assessing the Quality of Content and Assessment Description.” (available at http://outcomeprimers.com/templates/)

Template: Assessing the Quality of an Assessment Plan

Rating scale: 1 = absent, 2 = minimally met, 3 = adequately met, 4 = exceptionally met

| Areas to Assess | Rating | | | | Suggestions/Improvements |
|---|---|---|---|---|---|
| 1. Purpose and Alignment | 1 | 2 | 3 | 4 | |
| Selected assessments purposefully measure an intended outcome. | | | | | |
| 2. Content | 1 | 2 | 3 | 4 | |
| Selected assessments are affirmed by content experts (faculty, staff, or literature). | | | | | |
| 3. Accurate Information | 1 | 2 | 3 | 4 | |
| Selected assessments provide information that is as accurate and valid as possible. | | | | | |
| 4. Multiple and Direct Measures | 1 | 2 | 3 | 4 | |
| The assessment plan includes multiple measures, with at least one direct, authentic measure of student learning for each learning outcome. | | | | | |
| 5. Appropriate Standards | 1 | 2 | 3 | 4 | |
| Achievement targets are clearly stated and justified by faculty who teach the related content. | | | | | |
| 6. Data Collection | 1 | 2 | 3 | 4 | |
| Data collection processes are explained and appropriate. | | | | | |
| 7. Use of Results | 1 | 2 | 3 | 4 | |
| The plan includes ways to share, discuss, and use the results to improve student or institutional learning. | | | | | |

Appendix P - Outcomes and Assessment Evaluation Rubric

Adapted from the Assessment Progress Template (APT) Evaluation Rubric

James Madison University © 2013 Keston H. Fulcher, Donna L. Sundre & Javarro A. Russell

Full version: https://www.jmu.edu/assessment/_files/APT_Rubric_sp2015.pdf

| | 1 – Beginning | 2 – Developing | 3 – Good | 4 – Exemplary |
|---|---|---|---|---|
| 1. Student-centered learning outcomes | | | | |
| Clarity and Specificity | No outcomes stated. | Outcomes present, but with imprecise verbs (e.g., know, understand) and vague description of the content, skill, or attitudinal domain. | Outcomes generally contain precise verbs and rich description of the content, skill, or attitudinal domain. | All outcomes stated with clarity and specificity, including precise verbs and rich description of the content, skill, or attitudinal domain. |
| 2. Course/learning experiences that are mapped to outcomes | No activities/courses listed. | Activities/courses listed, but link to outcomes is absent. | Most outcomes have classes and/or activities linked to them. | All outcomes have classes and/or activities linked to them. |
| 3. Systematic method for evaluating progress on outcomes | | | | |
| A. Relationship between measures and outcomes | Seemingly no relationship between outcomes and measures. | At a superficial level, it appears the content assessed by the measures matches the outcomes, but no explanation is provided. | General detail about how outcomes relate to measures is provided. For example, the faculty wrote items to match the outcomes, or the instrument was selected “because its general description appeared to match our outcomes.” | Detail is provided regarding outcome-to-measure match. Specific items on the test are linked to outcomes. The match is affirmed by faculty subject experts (e.g., through a backwards translation). |
| B. Types of Measures | No measures indicated. | Most outcomes assessed primarily via indirect measures (e.g., surveys). | Most outcomes assessed primarily via direct measures. | All outcomes assessed using at least one direct measure (e.g., tests, essays). |
| C. Specification of desired results for outcomes | No a priori desired results for outcomes. | Statement of desired result (e.g., student growth, comparison to previous year’s data, comparison to faculty standards, performance vs. a criterion), but no specificity (e.g., students will perform better than last year). | Desired result specified (e.g., student performance will improve by at least 5 points next cycle; at least 80% of students will meet criteria). “Gathering baseline data” is acceptable for this rating. | Desired result specified and justified (e.g., last year the typical student scored 20 points on measure x; content coverage has been extended, which should improve the average score to at least 22 points). |
| D. Data collection and research design integrity | No information is provided about the data collection process, or data were not collected. | Limited information is provided about data collection, such as who and how many took the assessment, but not enough to judge the veracity of the process (e.g., thirty-five seniors took the test). | Enough information is provided to understand the data collection process, such as a description of the sample, testing protocol, testing conditions, and student motivation. Nevertheless, several methodological flaws are evident, such as unrepresentative sampling, inappropriate testing conditions, one rater for ratings, or mismatch with the specification of desired results. | The data collection process is clearly explained and is appropriate to the specification of desired results (e.g., representative sampling, adequate motivation, two or more trained raters for performance assessment, pre-post design to measure gain, cutoff defended for performance vs. a criterion). |

Appendix Q - Template for Writing an Assessment Report

In most cases, reports must be adjusted for the specific audience, and some type of summary should be part of the report. However, the following components should always be included. Save a copy of the table and add rows as needed.

| Outcome | Assessment Tool(s) | Standard(s) (or Benchmark) | Achievement Target(s) | Results (include comparison to previous cycle) | Improvement Strategies for Next Cycle |
|---|---|---|---|---|---|
| | | | | | |
| | | | | | |
| | | | | | |

Explanations

- Outcome – Each outcome should be reported separately.

- Assessment tool – This is an assignment, test, project, etc., that is used to measure an outcome. More than one assessment may be used for a given outcome, but every outcome should have a unique assessment or unique assessment items.

- Standard – This is a minimum score, rating, or other unit of achievement that is acceptable for satisfactory performance.

- Achievement target – A target is the minimum percentage of students who are expected to meet the standard on an assessment.

- Results – Report the results as they relate to the achievement target, discuss any findings and related details, and discuss noted trends as they relate to previous cycle(s).

  - State the result in terms of the target. For example, if the target is that 75% of students will score at least 90 on an exam and 82% do, write, “82% of students achieved a score of 90 or higher.”

  - Include the number of students who participated and any other relevant details, such as fewer class meetings due to college closures or a lower or higher number of students than usual.

  - Note any trends toward increased or decreased performance, providing thoughts on contributing factors.

- Improvement strategies for next cycle – Assessment reports should always describe any strategies planned to improve results.
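Comparing a standard with an achievement target is simple arithmetic. This short Python sketch is for illustration only. It assumes that scores are available as a list of numbers. The function names `percent_meeting_standard` and `target_met` and the sample scores are hypothetical; they are not part of any college reporting system.

```python
def percent_meeting_standard(scores, standard):
    """Return the percentage of students whose score is at or above the standard."""
    if not scores:
        raise ValueError("no scores reported")
    met = sum(1 for s in scores if s >= standard)
    return 100 * met / len(scores)


def target_met(scores, standard, target_percent):
    """Return True if the share of students meeting the standard reaches the target."""
    return percent_meeting_standard(scores, standard) >= target_percent


# Hypothetical class of 10 students: standard = a score of 90, target = 75% of students.
scores = [95, 88, 91, 90, 99, 72, 93, 90, 97, 94]
pct = percent_meeting_standard(scores, 90)
print(f"{pct:.0f}% of students achieved a score of 90 or higher.")
print("Target met" if target_met(scores, 90, 75) else "Target not met")
```

With these made-up scores, 8 of 10 students reach the standard of 90. The report would therefore state that 80% of students achieved a score of 90 or higher, which meets a 75% achievement target.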